Minghao Yin, Zhuliang Yao, Yue Cao, Xiu Li, Zheng Zhang, Stephen Lin, Han Hu

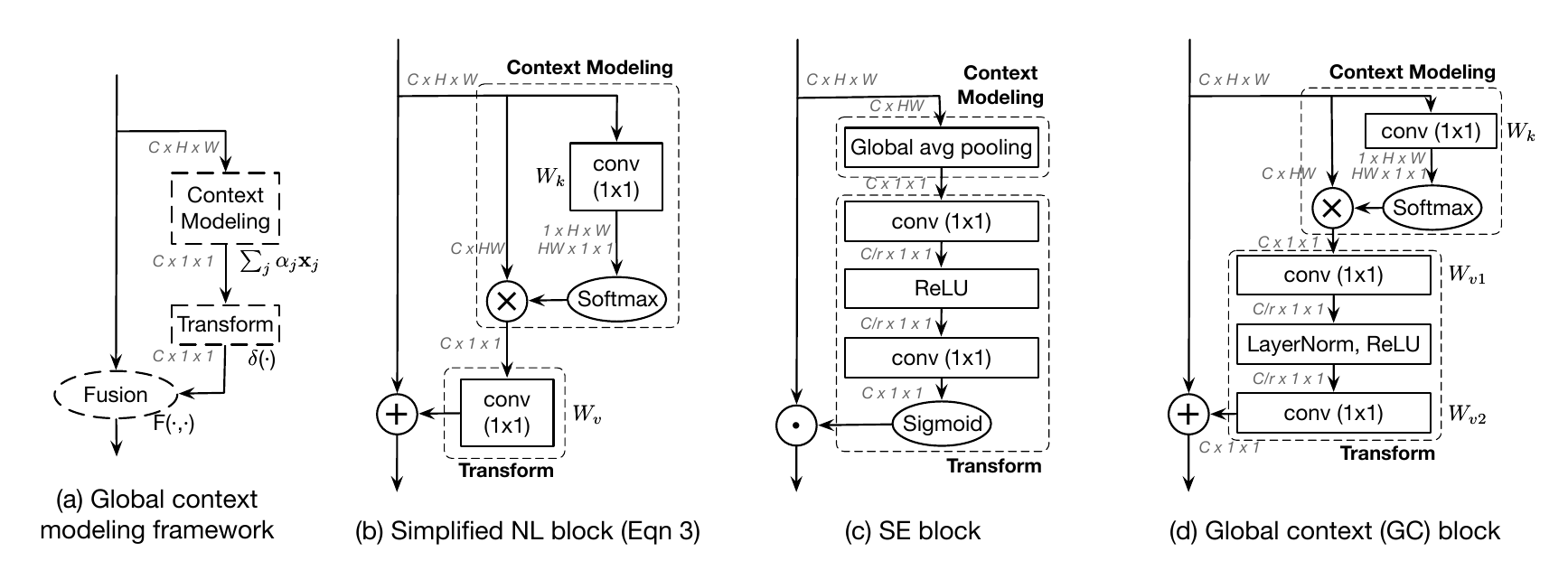

The non-local block is a popular module for strengthening the context modeling ability of a regular convolutional neural network. This paper first studies the non-local block in depth, where we find that its attention computation can be split into two terms, a whitened pairwise term accounting for the relationship between two pixels and a unary term representing the saliency of every pixel. We also observe that the two terms trained alone tend to model different visual clues, e.g. the whitened pairwise term learns within-region relationships while the unary term learns salient boundaries. However, the two terms are tightly coupled in the non-local block, which hinders the learning of each. Based on these findings, we present the disentangled non-local block, where the two terms are decoupled to facilitate learning for both terms. We demonstrate the effectiveness of the decoupled design on various tasks, such as semantic segmentation on Cityscapes, ADE20K and PASCAL Context, object detection on COCO, and action recognition on Kinetics.
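The split mentioned in the abstract can be written out explicitly. This is our own derivation, not copied from the paper. It assumes the embedded-Gaussian form of the non-local block, with query $q_i = W_q x_i$, key $k_j = W_k x_j$, means $\mu_q$ and $\mu_k$ taken over all pixels, and $\sigma$ denoting the softmax over $j$:

$$
q_i^\top k_j = \underbrace{(q_i-\mu_q)^\top (k_j-\mu_k)}_{\text{whitened pairwise}} + \underbrace{\mu_q^\top k_j}_{\text{unary}} + \underbrace{q_i^\top \mu_k - \mu_q^\top \mu_k}_{\text{constant in } j}
$$

$$
\omega(x_i, x_j) = \sigma\big(q_i^\top k_j\big) = \sigma\big((q_i-\mu_q)^\top (k_j-\mu_k) + \mu_q^\top k_j\big)
$$

The last group of terms drops out because a softmax over $j$ does not change when a quantity independent of $j$ is added. Both remaining terms share a single softmax, which is the coupling the abstract describes. As we understand it, the disentangled block normalizes each term separately and adds the results (the paper may compute the unary term with its own projection instead of $\mu_q^\top k_j$):

$$
\omega_D(x_i, x_j) = \sigma\big((q_i-\mu_q)^\top (k_j-\mu_k)\big) + \sigma\big(\mu_q^\top k_j\big)
$$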

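Judging from its title, this paper analyzes the attention mechanism inside the non-local block of Non-Local Neural Networks and disentangles its design. After decoupling, the attention splits into two parts: a pairwise term that represents the relationship between two pixels, and a unary term that represents the saliency of each pixel on its own. In the original non-local block these two terms are tightly coupled. The paper finds that when the two terms are trained separately, they model different visual clues (the whitened pairwise term learns within-region relationships, while the unary term learns salient boundaries). It also shows that decoupling them in the disentangled non-local block is effective on the segmentation, detection, and action recognition tasks listed in the abstract.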
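A small NumPy sketch helps check the decomposition numerically. It makes several assumptions that are ours rather than the paper's: `q`, `k`, `v` are random stand-ins for the 1x1-conv embeddings of a flattened feature map, there is no batch dimension, no output projection or residual connection, and the unary logits reuse $\mu_q^\top k_j$ from the decomposition. The helper names (`split_terms`, `disentangled_attention`, etc.) are hypothetical. Treat this as an illustration of the idea, not the authors' implementation.

```python
import numpy as np


def softmax(logits: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def nonlocal_attention(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Embedded-Gaussian non-local attention: row i is softmax_j(q_i . k_j)."""
    return softmax(q @ k.T, axis=1)


def split_terms(q: np.ndarray, k: np.ndarray):
    """Return (whitened pairwise logits of shape [N, N], unary logits of shape [N])."""
    mu_q = q.mean(axis=0)
    mu_k = k.mean(axis=0)
    pairwise = (q - mu_q) @ (k - mu_k).T  # relationship between pixel i and pixel j
    unary = k @ mu_q  # depends only on pixel j, i.e. its "saliency"
    return pairwise, unary


def disentangled_attention(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Sketch of the decoupled form: softmax each term separately, then add."""
    pairwise, unary = split_terms(q, k)
    return softmax(pairwise, axis=1) + softmax(unary)[None, :]


rng = np.random.default_rng(0)
num_pixels, channels = 16, 8  # N = H * W flattened positions, C embedding dims
q = rng.standard_normal((num_pixels, channels))  # stand-in for W_q x
k = rng.standard_normal((num_pixels, channels))  # stand-in for W_k x
v = rng.standard_normal((num_pixels, channels))  # stand-in for W_v x

# 1) The coupled non-local weights equal softmax(pairwise + unary).
pairwise, unary = split_terms(q, k)
coupled = softmax(pairwise + unary[None, :], axis=1)
print(np.allclose(nonlocal_attention(q, k), coupled))  # True

# 2) Disentangled weights: each row sums to 2 (one unit per softmax).
weights = disentangled_attention(q, k)
out = weights @ v  # aggregated context for every pixel
print(out.shape)  # (16, 8)
```

The first check passes because the two logit matrices differ only by a per-row constant, which the softmax over `j` ignores. In the decoupled form the unary softmax gives the same weights to every query pixel, so it acts like a shared, saliency-driven context, while the whitened pairwise softmax varies per query.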

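The paper is very well organized, from its analysis of the non-local block through to the new method it proposes. If you have time, read the original paper, Disentangled Non-Local Neural Networks.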

Before reading this post, please read Non-Local Neural Networks first.