Md Amirul Islam, Sen Jia, Neil D. B. Bruce

In contrast to fully connected networks, Convolutional Neural Networks (CNNs) achieve efficiency by learning weights associated with local filters with a finite spatial extent. An implication of this is that a filter may know what it is looking at, but not where it is positioned in the image. Information concerning absolute position is inherently useful, and it is reasonable to assume that deep CNNs may implicitly learn to encode this information if there is a means to do so. In this paper, we test this hypothesis, revealing the surprising degree of absolute position information that is encoded in commonly used neural networks. A comprehensive set of experiments shows the validity of this hypothesis and sheds light on how and where this information is represented, while offering clues to where positional information is derived from in deep CNNs.

Comments: Accepted to ICLR 2020

Introduction

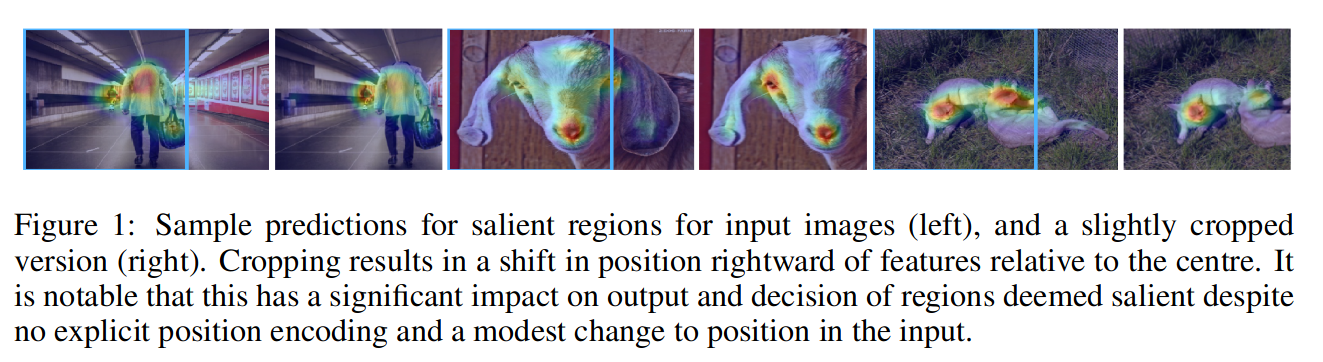

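Classic CNN models are considered spatially agnostic, which is why capsule networks or recurrent networks have been used to model relative spatial relationships within learned feature layers. It remains unclear whether CNNs capture the absolute spatial information that matters in position-dependent tasks such as semantic segmentation and salient object detection. As the figure shows, the regions identified as most salient tend to lie near the image center. When saliency detection is run on a cropped image, the most salient region shifts even though the visual features have not changed.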

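This paper studies the role of absolute position through a series of randomization tests, under the hypothesis that CNNs can indeed learn to encode position information as a cue for decision making. The experiments show that position information is learned implicitly through the commonly used padding operation (zero-padding).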
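
To get an intuition for why zero-padding can leak position, the following is a minimal NumPy sketch; it is not the paper's experimental setup. The helper names `conv2d_same` and `conv2d_valid`, the all-ones 5x5 image, and the 3x3 all-ones filter are assumptions chosen for illustration. The image is perfectly uniform, so a filter with no access to position should respond identically everywhere.

```python
import numpy as np


def conv2d_same(image, kernel):
    """Cross-correlate with zero padding so the output has the input's size.

    Hypothetical helper for illustration; assumes an odd-sized kernel.
    """
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    # Surround the image with a border of zeros, as zero-padding does.
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="constant", constant_values=0.0)
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


def conv2d_valid(image, kernel):
    """Cross-correlate without padding; the output shrinks by kernel size - 1."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow), dtype=float)
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# A uniform image: every location looks the same to a local filter.
image = np.ones((5, 5), dtype=float)
kernel = np.ones((3, 3), dtype=float)

padded_out = conv2d_same(image, kernel)
valid_out = conv2d_valid(image, kernel)

# With zero padding, responses differ between corner, edge, and interior.
print(padded_out)
# Without padding, every response is identical.
print(valid_out)
```

With zero-padding, the response is smaller at corners and edges than in the interior, because part of the filter window covers padded zeros. Without padding, the response is constant. Later layers could in principle build on this border signal to infer how far a location is from the image boundary, which is consistent with the claim above that padding is a source of position information.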